«A picture is worth a thousand words» is an English saying meaning that complex and sometimes multiple ideas can be expressed by a single still image, which conveys its meaning or essence more effectively than a mere verbal description.[1]

Images are great if you can see them. Search engines and visually impaired users cannot. By adding alt text and image descriptions, you make your content accessible to more people, and it might rank higher with Google. But if your website has tens of thousands of images without alt text, going back and adding it is a lot of work. What if you could auto-generate it? Turns out you can!

I've created a module for Episerver that uses the Computer Vision API from Microsoft Azure Cognitive Services, in combination with the Translator Text API, to generate various metadata for images.

Getting started

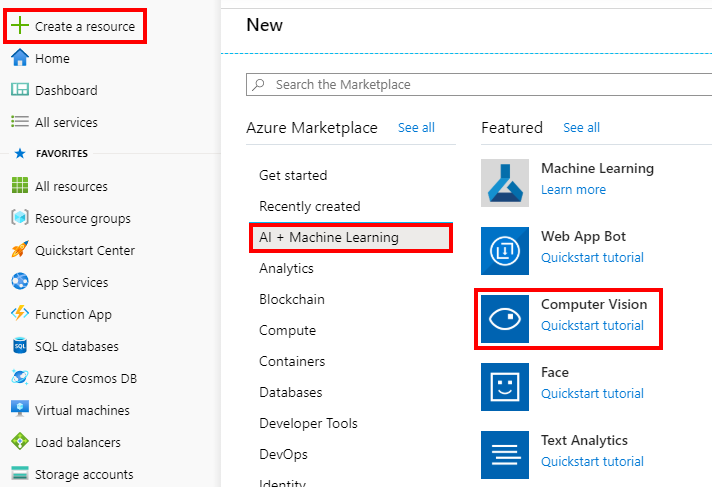

I'll start by creating an Azure Cognitive Services Computer Vision resource using the Azure portal. It's as easy as clicking here, here and here:

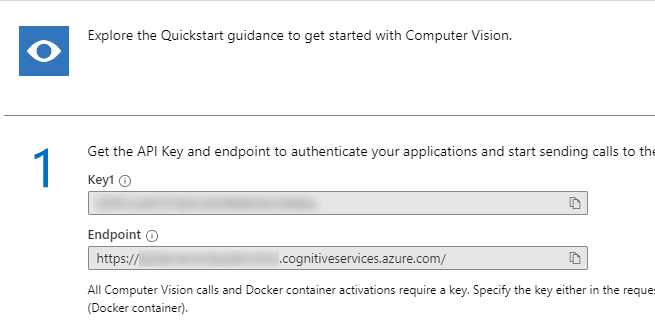

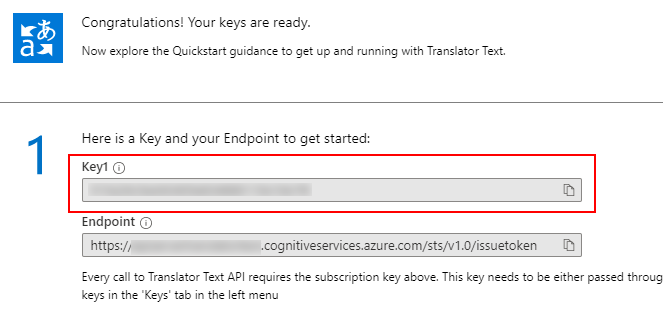

You will need these two values for configuration:

Add them to the «appSettings» section of web.config like this:

<add key="Gulla.Episerver.AutomaticImageDescription:ComputerVision.SubscriptionKey" value="abcdefgh1234567890abcdefgh1234567890" />
<add key="Gulla.Episerver.AutomaticImageDescription:ComputerVision.Endpoint" value="https://foo.cognitiveservices.azure.com/" />

The Computer Vision API supports only a limited number of languages, so if we want the result translated, we add a second Azure Cognitive Services resource: Translator Text. If you're happy with English image metadata, you may skip this step.

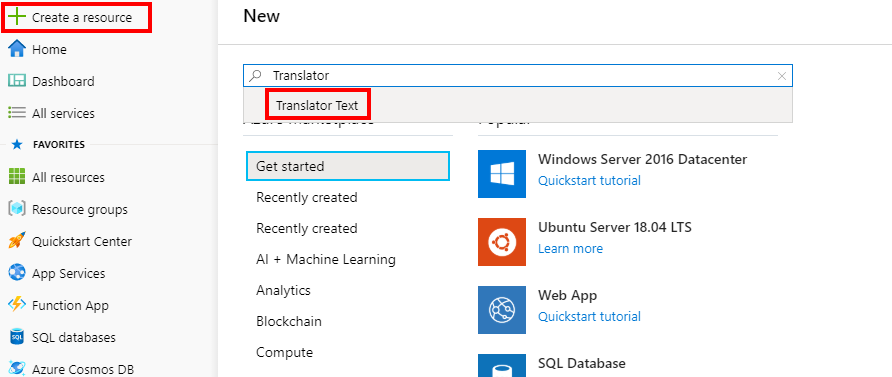

Click «Create a resource», and then search for «Translator Text»:

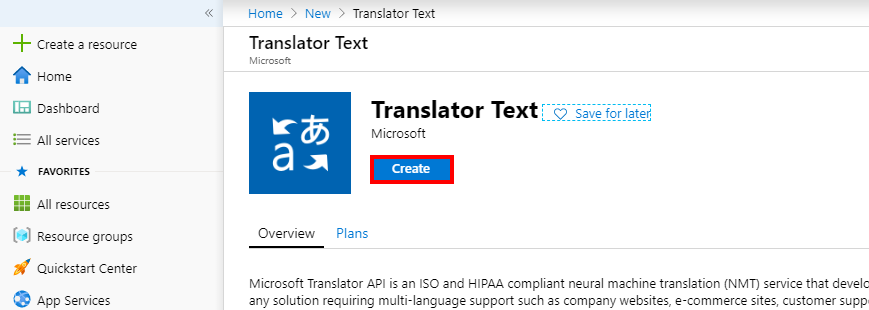

Finally, create the resource:

You will need this value for configuration:

Add it to the «appSettings» section of web.config like this:

<add key="Gulla.Episerver.AutomaticImageDescription:Translator.SubscriptionKey" value="abcdefgh1234567890abcdefgh1234567890" />

Then add the NuGet package Gulla.EpiserverAutomaticImageDescription, available on nuget.episerver.com, to your project.

Specifying what metadata to generate

In your Episerver solution, image files are stored in the database like any other content. Take this example from the Alloy demo site.

namespace Alloy.Models.Media
{
    [ContentType]
    [MediaDescriptor(ExtensionString = "jpg,jpeg,jpe,ico,gif,bmp,png")]
    public class ImageFile : ImageData
    {
    }
}

The metadata you want to generate automatically is added as properties on this content type. The properties are then decorated with specific attributes that populate them with data when images are uploaded. Attributes may also be added to block types that are used as local blocks on your image content type.

Each attribute handles one type of metadata. Some attributes may be added to several types of properties (e.g. both string and bool), and the same attribute may be used more than once. The module includes nine different attributes, but you can easily add your own by inheriting from the provided base class.
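To make the mechanism a bit more concrete, here is a minimal, self-contained sketch of attribute-driven population using plain reflection. It is not the module's actual code: every type name (SketchMetadataAttribute, SketchAnalysis, SketchPopulator and so on) is hypothetical, and the 0.5 threshold for the bool property is an assumption chosen for illustration only.

```csharp
using System;
using System.Reflection;

// Hypothetical stand-in for an attribute base class; not the module's real base class.
[AttributeUsage(AttributeTargets.Property)]
public abstract class SketchMetadataAttribute : Attribute
{
    // Writes a value derived from the analysis result to the decorated property.
    public abstract void Update(object target, PropertyInfo property, SketchAnalysis analysis);
}

// Hypothetical analysis result, standing in for what an image analysis API returns.
public class SketchAnalysis
{
    public string Caption { get; set; }
    public double AdultScore { get; set; }
}

public class SketchDescriptionAttribute : SketchMetadataAttribute
{
    public override void Update(object target, PropertyInfo property, SketchAnalysis analysis)
    {
        if (property.PropertyType == typeof(string))
            property.SetValue(target, analysis.Caption);
    }
}

// One attribute, several supported property types.
public class SketchAdultAttribute : SketchMetadataAttribute
{
    public override void Update(object target, PropertyInfo property, SketchAnalysis analysis)
    {
        if (property.PropertyType == typeof(bool))
            property.SetValue(target, analysis.AdultScore > 0.5); // assumed threshold
        else if (property.PropertyType == typeof(double))
            property.SetValue(target, analysis.AdultScore);
    }
}

public class SketchImage
{
    [SketchDescription] public string Description { get; set; }
    [SketchAdult] public bool IsAdultContent { get; set; }
    [SketchAdult] public double AdultScore { get; set; }
}

public static class SketchPopulator
{
    // Finds every decorated property and lets its attribute fill in the value.
    public static void Populate(object image, SketchAnalysis analysis)
    {
        foreach (var property in image.GetType().GetProperties())
        {
            foreach (var attribute in property.GetCustomAttributes<SketchMetadataAttribute>(inherit: true))
            {
                attribute.Update(image, property, analysis);
            }
        }
    }
}
```

Calling SketchPopulator.Populate(image, analysis) once per uploaded image fills all decorated properties in a single pass, which is roughly the idea behind using the same attribute on both a bool and a double property.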

A simple example: adding the AnalyzeImageForDescription attribute to a property will generate a description/caption of the image, which may be used as alt text. The property gets its value when the image is uploaded. The editor may then check the value and adjust it if necessary.

[AnalyzeImageForDescription]
public virtual string Description { get; set; }

The different attributes

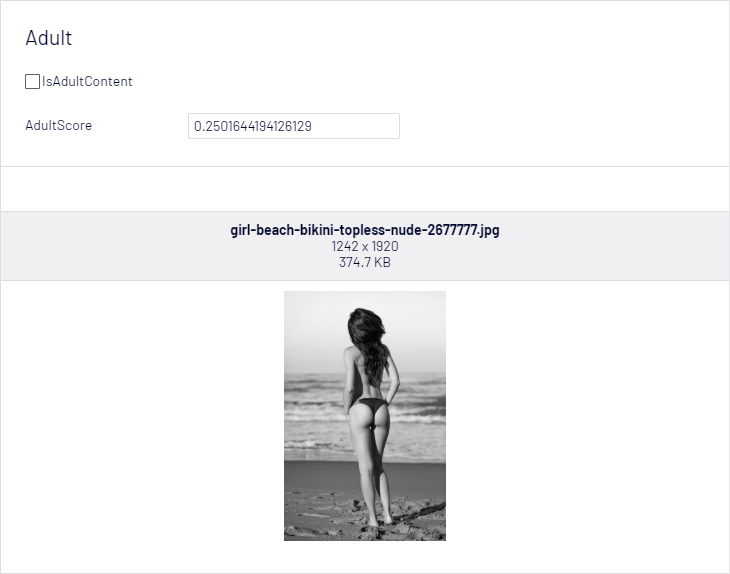

[AnalyzeImageForAdultContent]

This attribute will try to identify adult content. Adult images are defined as those which are explicitly sexual in nature and often depict nudity and sexual acts.

May be added to the following property types:

- Bool: True/false indicating if the image has adult content.

- Double: A value ranging from 0.0 to 1.0 indicating Adult Score.

- String: A value ranging from 0.0 to 1.0 indicating Adult Score.

Example:

public class AdultBlock : BlockData
{
    [AnalyzeImageForAdultContent]
    public virtual bool IsAdultContent { get; set; }

    [AnalyzeImageForAdultContent]
    public virtual double AdultScore { get; set; }
}

Image by Claudio_Scott from Pixabay

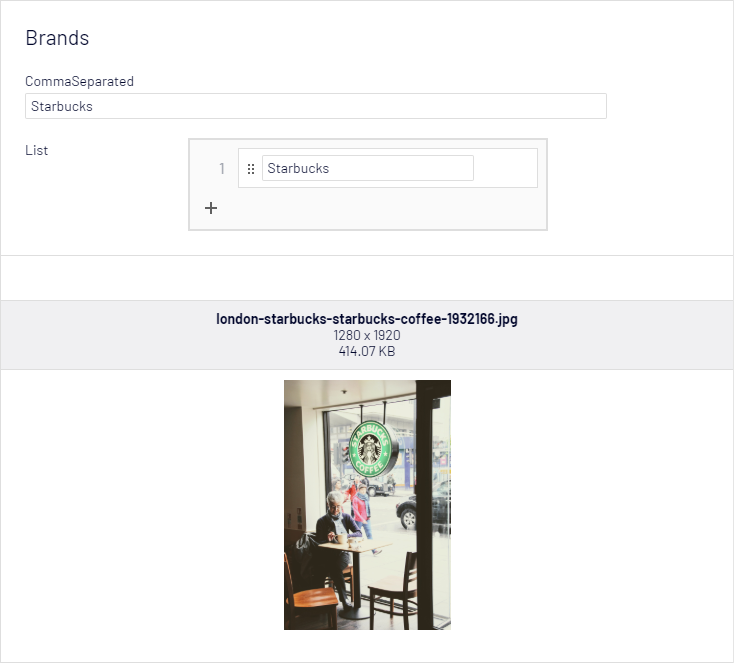

[AnalyzeImageForBrands]

This attribute will try to identify brands in the image. Brand detection is a specialized mode of object detection that uses a database of thousands of global logos to identify commercial brands.

May be added to the following property types:

- String: A comma-separated list of brands.

- String-list: A list of brands.

Example:

public class BrandBlock : BlockData
{
    [AnalyzeImageForBrands]
    public virtual string CommaSeparated { get; set; }

    [AnalyzeImageForBrands]
    public virtual IList<string> List { get; set; }
}

Image by Peggy und Marco Lachmann-Anke from Pixabay

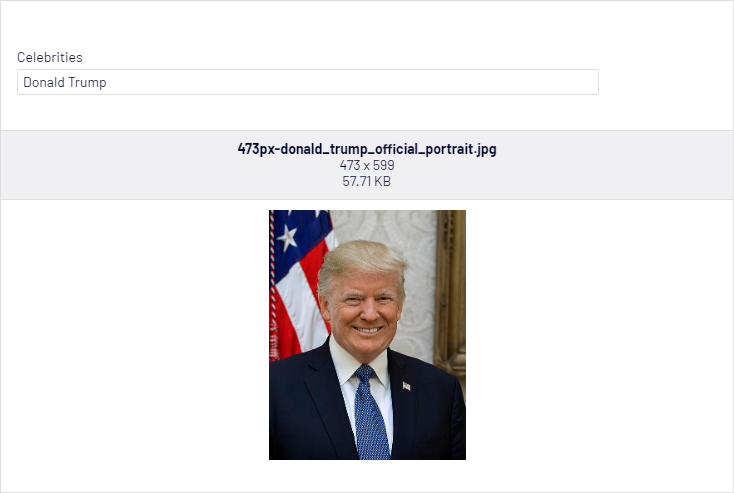

[AnalyzeImageForCelebrities]

This attribute will try to identify and name celebrities present in the image.

May be added to the following property types:

- String: A comma-separated list of celebrities.

- String-list: A list of celebrities.

Example:

[AnalyzeImageForCelebrities]
public virtual string Celebrities { get; set; }

[AnalyzeImageForDescription]

This attribute will describe the image in a human-readable language. Suitable for captions and alt texts.

May be added to the following property types:

- String: A description of the image.

Parameters:

- languageCode: Passing a parameter from the TranslationLanguage-class, you may choose between about 60 different languages. Optional. English is default.

- upperCaseFirstLetter: Whether the description should start with an uppercase character. True is default.

- endWithDot: Whether the description should end with a dot. True is default.

Example:

public class DescriptionBlock : BlockData
{
    [AnalyzeImageForDescription]
    public virtual string English { get; set; }

    [AnalyzeImageForDescription(TranslationLanguage.Norwegian)]
    public virtual string Norwegian { get; set; }

    [AnalyzeImageForDescription(TranslationLanguage.Spanish)]
    public virtual string Spanish { get; set; }

    [AnalyzeImageForDescription(TranslationLanguage.ChineseTraditional, false, false)]
    public virtual string Chinese { get; set; }

    [AnalyzeImageForDescription(TranslationLanguage.Arabic, false, false)]
    public virtual string Arabic { get; set; }
}

Image by paulohabreuf from Pixabay
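The upperCaseFirstLetter and endWithDot parameters of AnalyzeImageForDescription can be pictured as a small post-processing step on the generated caption. The sketch below is an assumption about what such a step could look like, not the module's implementation; DescriptionFormatSketch and Format are hypothetical names.

```csharp
using System;

public static class DescriptionFormatSketch
{
    // Applies the two formatting options to a raw caption.
    public static string Format(string description, bool upperCaseFirstLetter = true, bool endWithDot = true)
    {
        if (string.IsNullOrWhiteSpace(description))
            return string.Empty;

        var result = description.Trim();

        if (upperCaseFirstLetter)
            result = char.ToUpperInvariant(result[0]) + result.Substring(1);

        // Avoid a double dot if the caption already ends with one.
        if (endWithDot && !result.EndsWith(".", StringComparison.Ordinal))
            result += ".";

        return result;
    }
}
```

With the defaults, Format("a dog sitting on a beach") returns "A dog sitting on a beach."; with both flags set to false the text is returned unchanged apart from trimming. Switching both off, as in the Chinese and Arabic examples above, is presumably useful for scripts that have no uppercase letters and use other sentence-ending punctuation.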

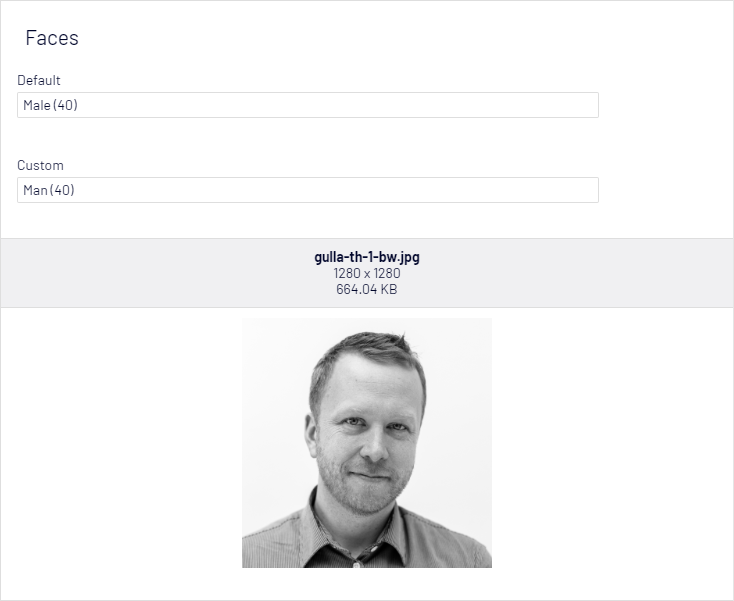

[AnalyzeImageForFaces]

This attribute will detect faces, and estimate age and gender for each.

May be added to the following property types:

- String: A comma-separated list of gender/age pairs.

- String-list: A list of gender/age pairs.

Parameters:

- languageCode: By default, the face description will be in the format «Male (40)». Passing a parameter from the TranslationLanguage-class, you may choose between about 60 different languages. Optional. English is default.

Alternative parameters:

Male/female are not always the preferred words, or the default translations to your language may not be the best. Maybe children should be differentiated from adults. Use the alternative constructor!

- maleAdultString: How to name a male adult, e.g. «Man».

- femaleAdultString: How to name a female adult, e.g. «Woman».

- otherAdultString: How to name other adults, e.g. «Person».

- maleChildString: How to name a male child, e.g. «Boy».

- femaleChildString: How to name a female child, e.g. «Girl».

- otherChildString: How to name other children, e.g. «Child».

- childTurnsAdultAtAge: At what age you consider a person to become an adult.

Example:

public class FaceBlock : BlockData
{
    [AnalyzeImageForFaces]
    public virtual string Default { get; set; }

    [AnalyzeImageForFaces("Man", "Woman", "Person", "Boy", "Girl", "Child", 18)]
    public virtual string Custom { get; set; }
}
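As a rough illustration of how the alternative constructor's parameters could be combined into a label, here is a self-contained sketch. It is a guess at the logic, not the module's code: FaceLabelSketch and Describe are hypothetical names, the "Male"/"Female" input strings are assumed, and treating a person as an adult from the given age and upwards (>=) is also an assumption.

```csharp
using System;

public static class FaceLabelSketch
{
    // Builds a label such as "Man (40)" from a detected gender and age.
    public static string Describe(
        string gender, int age,
        string maleAdultString = "Man", string femaleAdultString = "Woman", string otherAdultString = "Person",
        string maleChildString = "Boy", string femaleChildString = "Girl", string otherChildString = "Child",
        int childTurnsAdultAtAge = 18)
    {
        // Assumption: the threshold age itself counts as adult.
        var isAdult = age >= childTurnsAdultAtAge;

        string label;
        if (string.Equals(gender, "Male", StringComparison.OrdinalIgnoreCase))
            label = isAdult ? maleAdultString : maleChildString;
        else if (string.Equals(gender, "Female", StringComparison.OrdinalIgnoreCase))
            label = isAdult ? femaleAdultString : femaleChildString;
        else
            label = isAdult ? otherAdultString : otherChildString;

        return $"{label} ({age})";
    }
}
```

With the values from the FaceBlock example, Describe("Male", 40) gives "Man (40)" and Describe("Female", 7) gives "Girl (7)".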

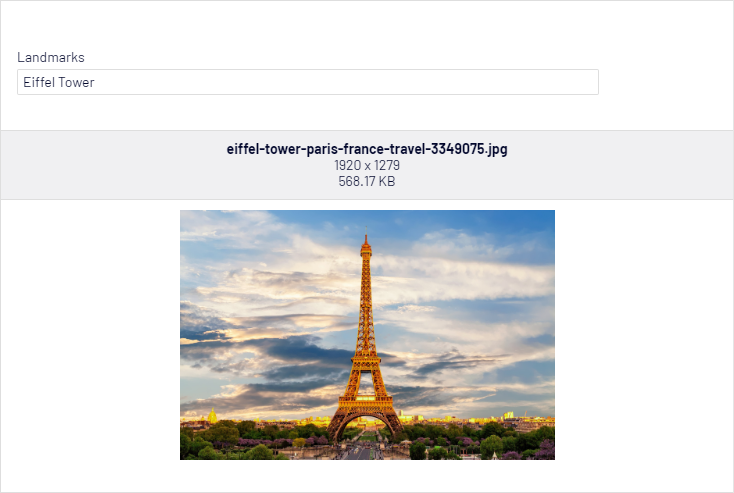

[AnalyzeImageForLandmarks]

This attribute will try to identify and name landmarks present in the image.

May be added to the following property types:

- String: A comma-separated list of landmarks.

- String-list: A list of landmarks.

Example:

[AnalyzeImageForLandmarks]
public virtual string Landmarks { get; set; }

Image by Pete Linforth from Pixabay

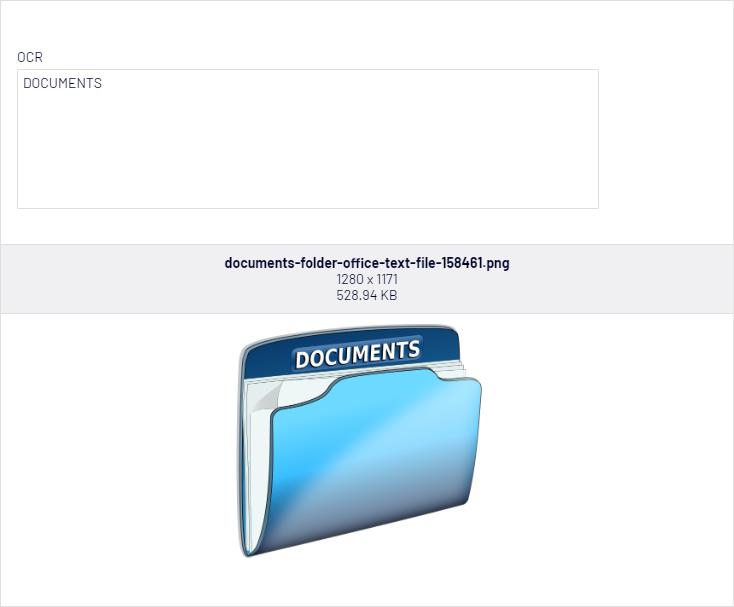

[AnalyzeImageForOcr]

This attribute will use OCR (Optical Character Recognition) to identify words written in the image. It is not optimized for large documents but can recognize quite a few languages.

May be added to the following property types:

- String: A string containing the OCR result.

Parameters:

- toLanguage: By default, the OCR result is presented in the same language as the text in the image. If you want, you may translate it to another language by passing a parameter from the TranslationLanguage-class; there are about 60 different languages to choose from. Optional.

- fromLanguage: If you do not specify the source language, Azure Cognitive Services will try to detect it. If you know that all images contain text in the same language, you may specify the source language for better translation results, especially for shorter texts. Optional.

Example:

[UIHint(UIHint.Textarea)]
[AnalyzeImageForOcr]
public virtual string OCR { get; set; }

Image by OpenClipart-Vectors from Pixabay

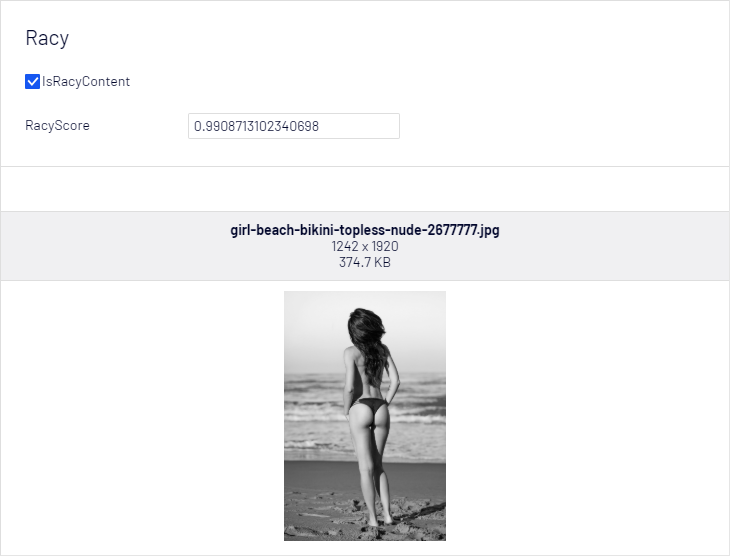

[AnalyzeImageForRacyContent]

This attribute will try to identify racy content. Racy images are defined as images that are sexually suggestive in nature and often contain less sexually explicit content than images tagged as Adult.

May be added to the following property types:

- Bool: True/false indicating if the image has racy content.

- Double: A value ranging from 0.0 to 1.0 indicating Racy Score.

- String: A value ranging from 0.0 to 1.0 indicating Racy Score.

Example:

public class RacyBlock : BlockData
{
    [AnalyzeImageForRacyContent]
    public virtual bool IsRacyContent { get; set; }

    [AnalyzeImageForRacyContent]
    public virtual double RacyScore { get; set; }
}

Image by Claudio_Scott from Pixabay

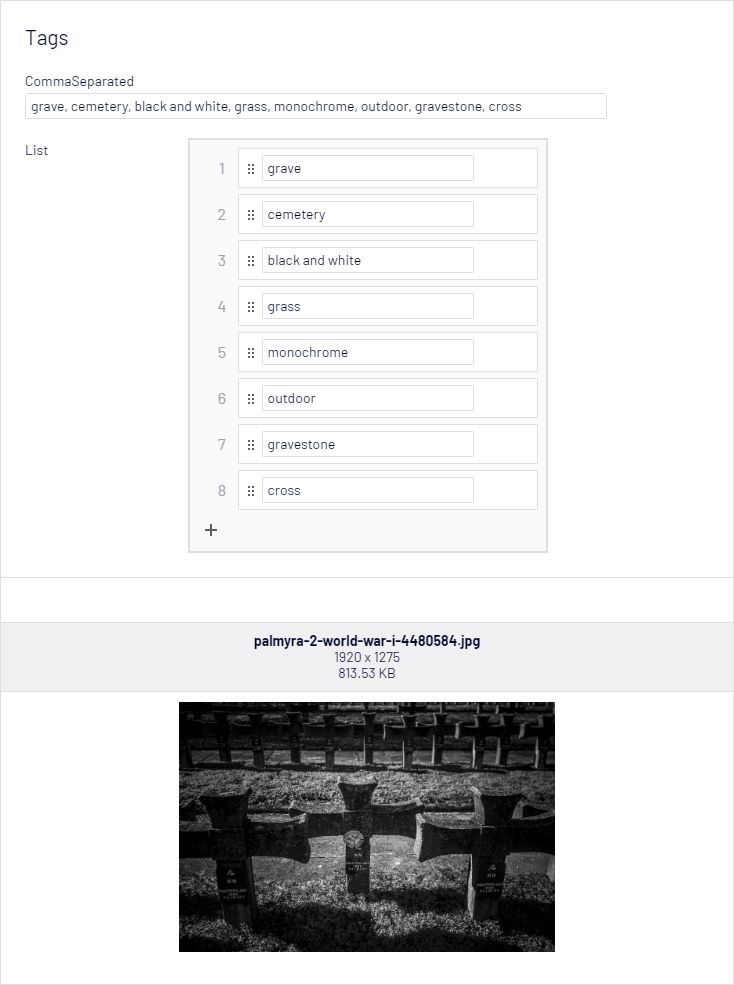

[AnalyzeImageForTags]

This attribute will return tags that describe the image. Azure Computer Vision returns tags based on thousands of recognizable objects, living beings, scenery, and actions.

May be added to the following property types:

- String: A comma-separated list of tags.

- String-list: A list of tags.

Parameters:

- languageCode: Passing a parameter from the TranslationLanguage-class, you may choose between about 60 different languages. Optional. English is default.

Example:

public class TagBlock : BlockData
{
    [AnalyzeImageForTags]
    public virtual string CommaSeparated { get; set; }

    [AnalyzeImageForTags]
    public virtual IList<string> List { get; set; }
}

Image by Kamil Cyprian from Pixabay

Adding metadata to existing images

When adding attributes to properties, those properties are populated with metadata when the image is uploaded. So what about images that were uploaded before you started using this module?

You may add the NuGet package Gulla.Episerver.AutomaticImageDescription.ScheduledJob that (surprise!) will give you a scheduled job that can generate metadata, based on the attributes, for your existing images. When the job is done, simply remove the NuGet package, to get rid of the job from admin mode.

By default, the job sends at most 20 requests per minute to the Computer Vision API, so it's possible to stay within the free tier. To adjust the throttling, add the following line to the «appSettings» section of your web.config.

<add key="Gulla.Episerver.AutomaticImageDescription:ScheduledJob.MaxRequestsPerMinute" value="20" />

20 is the default; set value="0" to get maximum throughput.
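To get a feel for what a per-minute limit means in practice, the arithmetic can be sketched as below. This is only an illustration of the idea, not how the scheduled job is implemented; ThrottleSketch and DelayBetweenRequests are hypothetical names, and spacing the requests evenly is an assumption.

```csharp
using System;

public static class ThrottleSketch
{
    // Returns how long to wait between two requests for a given per-minute limit.
    // A limit of 0 (or less) is treated as "no throttling".
    public static TimeSpan DelayBetweenRequests(int maxRequestsPerMinute)
    {
        if (maxRequestsPerMinute <= 0)
            return TimeSpan.Zero;

        return TimeSpan.FromSeconds(60.0 / maxRequestsPerMinute);
    }
}
```

With the default of 20, evenly spaced requests are three seconds apart, so 1,000 requests would take roughly 50 minutes. With 0 there is no pause at all.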

If you have a lot of images and think the job may not finish in one go, you may add the interface IAnalyzableImage (included in the NuGet package) to your image content type. It adds a bool property, ImageAnalysisCompleted, that is used to keep track of which images have been processed and which have not.

If you plan to use the approach with the interface, add the interface to your image content type before uploading new images. That way, the new images will get the correct status, and changes will not be overwritten.
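The idea of a completion flag that lets a job resume where it left off can be sketched as follows. The types here are stand-ins written for this example: IAnalyzableImageSketch only mirrors the shape described above (a single bool property), and ImageSketch, ResumableJobSketch and the batchSize parameter are hypothetical.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in mirroring the shape described for IAnalyzableImage.
public interface IAnalyzableImageSketch
{
    bool ImageAnalysisCompleted { get; set; }
}

public class ImageSketch : IAnalyzableImageSketch
{
    public string Name { get; set; }
    public bool ImageAnalysisCompleted { get; set; }
}

public static class ResumableJobSketch
{
    // Processes at most batchSize unprocessed images and returns how many were handled.
    public static int ProcessBatch(IEnumerable<IAnalyzableImageSketch> images, int batchSize)
    {
        // Materialize the list first, since the flag is changed while iterating.
        var pending = images.Where(i => !i.ImageAnalysisCompleted).Take(batchSize).ToList();

        foreach (var image in pending)
        {
            // A real job would call the analysis service and save the content here.
            image.ImageAnalysisCompleted = true;
        }

        return pending.Count;
    }
}
```

Because completed images are skipped, running the batch again simply continues with whatever is left, and an image that already carries the flag is never analyzed (and overwritten) a second time.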

Translation caching

Translations are specified using parameters to the attributes. The translations are cached per image so that if you e.g. use the AnalyzeImageForTags attribute for both a string-property and a List<string>-property on the same image, the translation request will only be sent to Azure Cognitive Services Translator Text API once.
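A per-image cache of this kind can be sketched as a dictionary keyed on text and target language. The class below is an assumption about the general technique, not the module's TranslationService; TranslationCacheSketch, RemoteCalls and the injected translate delegate are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

// One instance is meant to live for the duration of a single image's analysis.
public class TranslationCacheSketch
{
    private readonly Func<string, string, string> _translate;
    private readonly Dictionary<(string Text, string Language), string> _cache =
        new Dictionary<(string Text, string Language), string>();

    // Counts how many times the underlying (remote) translation was invoked.
    public int RemoteCalls { get; private set; }

    public TranslationCacheSketch(Func<string, string, string> translate)
    {
        _translate = translate;
    }

    public string Translate(string text, string languageCode)
    {
        var key = (text, languageCode);
        if (_cache.TryGetValue(key, out var cached))
            return cached;

        RemoteCalls++;
        var translated = _translate(text, languageCode);
        _cache[key] = translated;
        return translated;
    }
}
```

If two properties on the same image ask for the same tags in the same language, the second call is answered from the dictionary and RemoteCalls stays at 1.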

Creating your own attributes

New attributes may be created by inheriting from the abstract base class BaseImageDetailsAttribute. You then override one or more of the following properties to indicate what kind of resources your attribute needs.

/// <summary>
/// Flag if the Update method needs imageAnalyzerResult to be populated.
/// </summary>
public virtual bool AnalyzeImageContent => false;

/// <summary>
/// Flag if the Update method needs ocrResult to be populated.
/// </summary>
public virtual bool AnalyzeImageOcr => false;

/// <summary>
/// Flag if the Update method needs translationService to be populated.
/// </summary>
public virtual bool RequireTranslations => false;

If you rely on data from the Computer Vision API, override AnalyzeImageContent and return true. If you rely on OCR data, override AnalyzeImageOcr and return true. If you are using the Translation API, override RequireTranslations and return true.

Finally, implement the Update method, where you inspect the image analysis result and update the property decorated with your attribute.

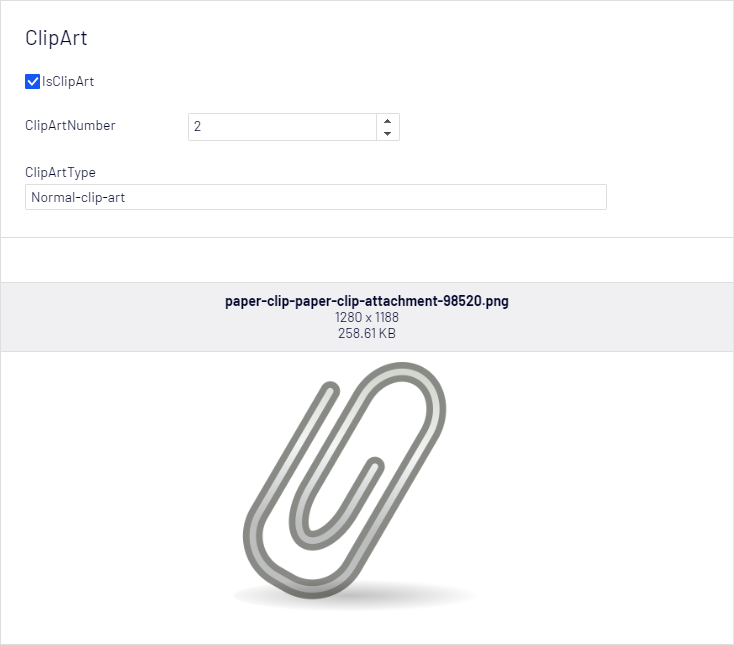

An example implementation of a custom attribute

This attribute will analyze the image and check if it's a Clip Art. The attribute may be added to three different property types.

/// <summary>
/// Analyze image, check if it is a Clip Art.
/// </summary>
public class AnalyzeImageForClipArtAttribute : BaseImageDetailsAttribute
{
    public override bool AnalyzeImageContent => true;

    public override void Update(PropertyAccess propertyAccess, Microsoft.Azure.CognitiveServices.Vision.ComputerVision.Models.ImageAnalysis imageAnalyzerResult, OcrResult ocrResult, TranslationService translationService)
    {
        if (imageAnalyzerResult.ImageType == null)
        {
            return;
        }

        if (IsBooleanProperty(propertyAccess.Property))
        {
            propertyAccess.SetValue(imageAnalyzerResult.ImageType.ClipArtType > 1);
        }
        else if (IsIntProperty(propertyAccess.Property))
        {
            propertyAccess.SetValue(imageAnalyzerResult.ImageType.ClipArtType);
        }
        else if (IsStringProperty(propertyAccess.Property))
        {
            propertyAccess.SetValue(GetClipArtDescription(imageAnalyzerResult.ImageType.ClipArtType));
        }
    }

    // https://docs.microsoft.com/en-us/azure/cognitive-services/computer-vision/concept-detecting-image-types
    private static object GetClipArtDescription(int imageTypeClipArtType)
    {
        switch (imageTypeClipArtType)
        {
            case 0: return "Non-clip-art";
            case 1: return "Ambiguous";
            case 2: return "Normal-clip-art";
            case 3: return "Good-clip-art";
            default: return "";
        }
    }
}

Example:

public class ClipArtBlock : BlockData
{
    [AnalyzeImageForClipArt]
    public virtual bool IsClipArt { get; set; }

    [AnalyzeImageForClipArt]
    public virtual int ClipArtNumber { get; set; }

    [AnalyzeImageForClipArt]
    public virtual string ClipArtType { get; set; }
}

Image by OpenIcons from Pixabay

Related blog posts

Did you notice that the string properties in my screenshots are wider than normal? Read this post to see how it's done.

Did you see the image preview in all properties mode? Read this post, to see how it's done.

Do you wonder why you would need the image description in multiple languages? Read this post.

How to get it

The source code is available on GitHub. Feel free to report an issue, or create a pull request.

The module may be installed from Episerver's NuGet feed; just search for «Gulla.Episerver.AutomaticImageDescription».